Regularization in Machine Learning: Meaning

L1 regularization can lead to model sparsity, meaning that some weights are exactly zero, and this can be helpful. The regularization parameter in machine learning is λ and has the following features.
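As a minimal sketch of why L1 regularization produces zeros, consider the simplest possible case: a single weight whose unregularized value is `z`, penalized with strength `lam`. The function names, the sample values, and the one-dimensional setup are assumptions made for illustration. The L1 solution (soft-thresholding) snaps small weights to exactly zero, while the L2 solution only scales them down.

```python
def l1_shrink(z, lam):
    """Minimizer of 0.5*(w - z)**2 + lam*abs(w), i.e. soft-thresholding."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0  # anything inside [-lam, lam] becomes exactly zero


def l2_shrink(z, lam):
    """Minimizer of 0.5*(w - z)**2 + 0.5*lam*w**2, i.e. proportional shrinkage."""
    return z / (1.0 + lam)


# Hypothetical unregularized weights and penalty strength.
unregularized = [3.0, -0.4, 0.2, -2.5, 0.05]
lam = 0.5

l1_weights = [l1_shrink(z, lam) for z in unregularized]
l2_weights = [l2_shrink(z, lam) for z in unregularized]

print("L1:", l1_weights)  # small weights are set to zero
print("L2:", l2_weights)  # every weight shrinks but stays non-zero
```

With these values, the three small weights vanish under L1, which is the sparsity described above. Under L2, none of them do.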

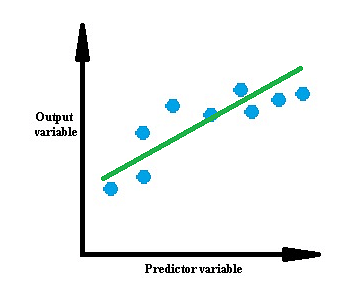

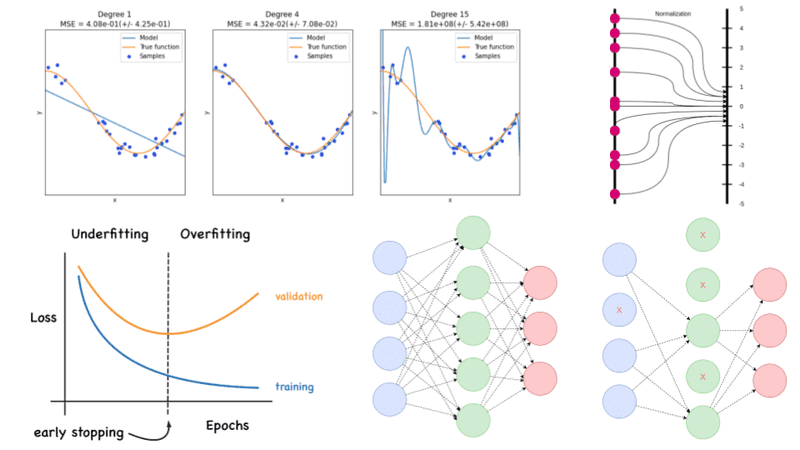

A Visual Explanation For Regularization Of Linear Models

L2 regularization is the penalty used in Ridge regression, a model tuning method used for analyzing data that suffers from multicollinearity.
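To illustrate the multicollinearity point, here is a small sketch that solves the ridge normal equations, (XᵀX + λI)w = Xᵀy, for two features without an intercept. The function name and the toy data are hypothetical. The second feature is exactly twice the first, which is the extreme case of multicollinearity, so the unregularized system has no unique solution.

```python
def fit_two_feature_ridge(rows, targets, lam):
    """Solve (X^T X + lam*I) w = X^T y for two features, no intercept."""
    a = sum(x1 * x1 for x1, _ in rows) + lam
    b = sum(x1 * x2 for x1, x2 in rows)
    d = sum(x2 * x2 for _, x2 in rows) + lam
    g1 = sum(x1 * y for (x1, _), y in zip(rows, targets))
    g2 = sum(x2 * y for (_, x2), y in zip(rows, targets))
    det = a * d - b * b
    if det == 0:
        raise ValueError("singular system; try lam > 0")
    # Closed-form inverse of the 2x2 matrix [[a, b], [b, d]].
    return ((d * g1 - b * g2) / det, (a * g2 - b * g1) / det)


# Toy data: x2 = 2 * x1, and y = 5 * x1.
rows = [(1, 2), (2, 4), (3, 6)]
targets = [5, 10, 15]

for lam in (0.0, 1.0):
    try:
        print(lam, fit_two_feature_ridge(rows, targets, lam))
    except ValueError as err:
        print(lam, "failed:", err)
```

With `lam = 0.0` the system is singular and the fit fails. With `lam = 1.0` the added diagonal term makes it solvable, and the weights land near (1, 2).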

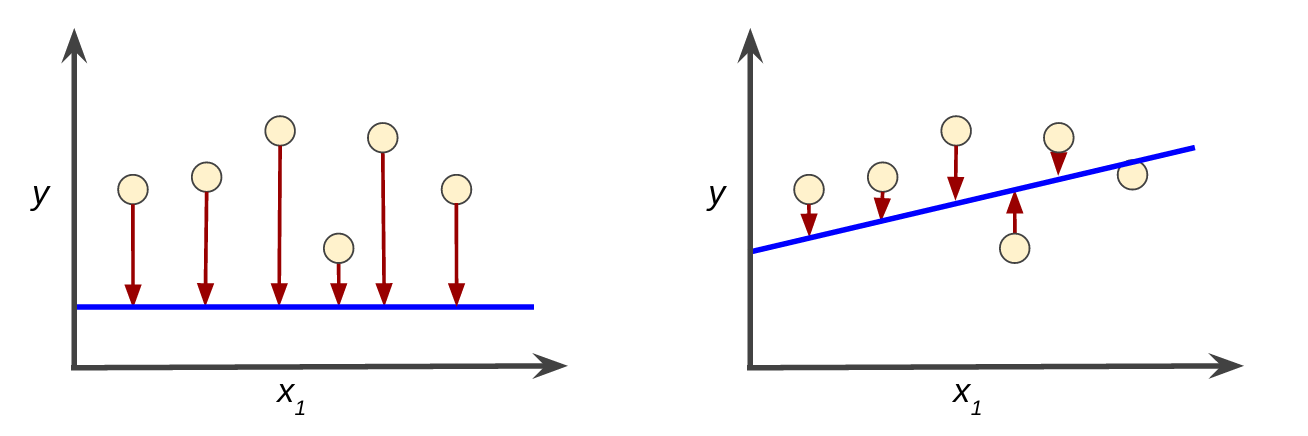

Regularization is one of the most important concepts of machine learning. It is a technique to prevent the model from overfitting by adding extra information to it. During regularization, a penalty or complexity term is added to the complex model.

How does regularization work? This is an important theme in machine learning. Let's consider the simple linear regression equation.
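One common way to write the simple linear regression model and a regularized objective is sketched below. The symbols (β₀, β₁, ε, W, λ) and the exact form of the penalty are assumptions chosen for illustration. In particular, the squared Frobenius norm is one conventional choice, and leaving the intercept unpenalized is a convention rather than a requirement.

```latex
% Simple linear regression: one input x, intercept \beta_0, slope \beta_1
y = \beta_0 + \beta_1 x + \varepsilon

% Generic regularized objective (assumed form): loss plus squared Frobenius norm
\min_{W} \; L(W) + \lambda \lVert W \rVert_F^2,
\qquad
\lVert W \rVert_F^2 = \sum_{i}\sum_{j} W_{ij}^2

% Specialized to simple linear regression with squared-error loss
\min_{\beta_0, \beta_1} \; \sum_{i=1}^{n} \bigl(y_i - \beta_0 - \beta_1 x_i\bigr)^2 + \lambda \beta_1^2
```

For a weight vector rather than a matrix, the Frobenius norm reduces to the ordinary Euclidean norm, so the last line is the ridge-penalized version of the first.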

L1 regularization is another common form of regularization. In the above equation, L is any loss function and F denotes the Frobenius norm. Regularization means restricting a model to avoid overfitting by shrinking the coefficient estimates towards zero.

Objective function with regularization. It reduces by ignoring ... When a model suffers from overfitting, we should control the ...

Ridge regularization: also known as Ridge regression, it adjusts models with overfitting or underfitting by adding a penalty equivalent to the sum of the squares of the coefficients. If the weight for some attribute/feature equals zero, it means that the ...
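The penalty terms can be sketched in a few lines. The helper names, the toy data, and the use of mean squared error as the base loss are assumptions for illustration. The L2 (ridge) penalty is the sum of squared weights, the L1 (lasso) penalty is the sum of absolute weights, and either one is scaled by λ and added to the loss.

```python
def mse(weights, rows, targets):
    """Mean squared error of a linear model without intercept."""
    total = 0.0
    for row, y in zip(rows, targets):
        prediction = sum(w * x for w, x in zip(weights, row))
        total += (y - prediction) ** 2
    return total / len(targets)


def l1_penalty(weights):
    """Lasso penalty: sum of absolute values of the weights."""
    return sum(abs(w) for w in weights)


def l2_penalty(weights):
    """Ridge penalty: sum of squares of the weights."""
    return sum(w * w for w in weights)


def regularized_loss(weights, rows, targets, lam, penalty):
    """Base loss plus lam times the chosen penalty."""
    return mse(weights, rows, targets) + lam * penalty(weights)


# Toy data that the weights below fit perfectly, so the base loss is zero
# and the regularized loss consists only of the penalty term.
rows = [(1.0, 0.0), (0.0, 1.0)]
targets = [2.0, -1.0]
weights = [2.0, -1.0]

print("ridge:", regularized_loss(weights, rows, targets, 0.1, l2_penalty))
print("lasso:", regularized_loss(weights, rows, targets, 0.1, l1_penalty))
```

Because the penalty grows with the size of the weights, a model can lower its regularized loss by keeping weights small, even at the cost of a slightly worse fit.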

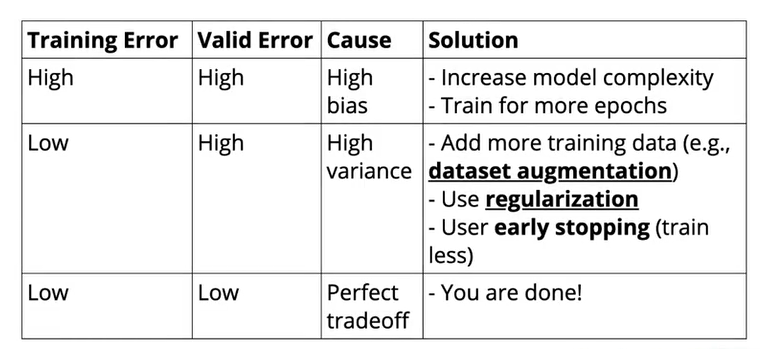

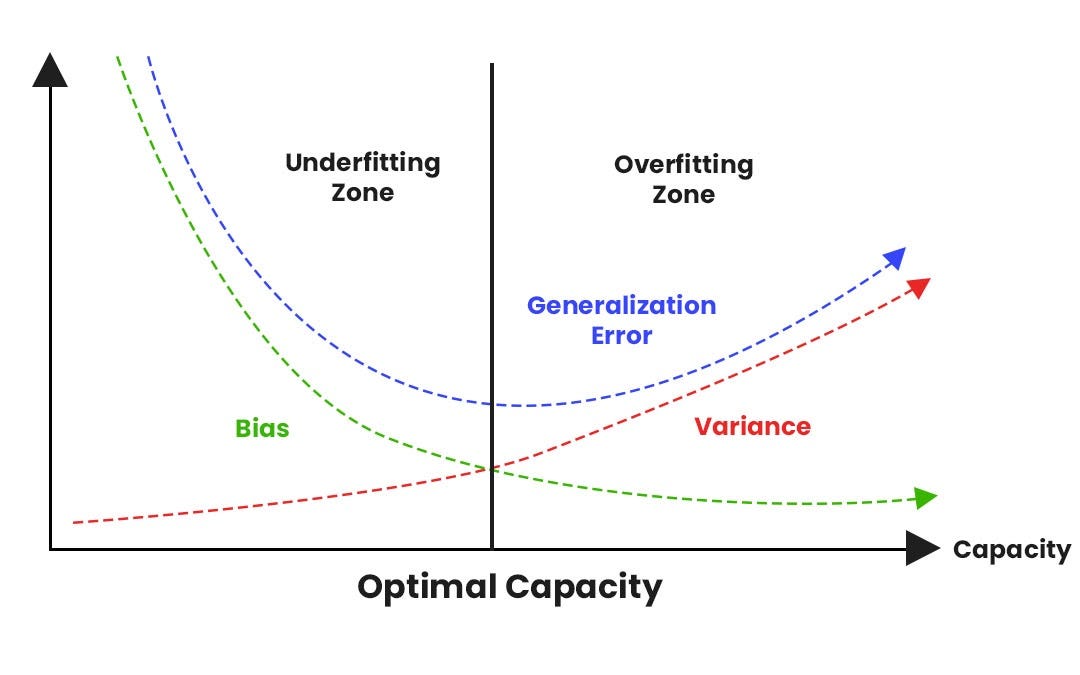

Regularization refers to modifications made to a learning algorithm that help reduce its generalization error but not its training error. While regularization is used with many ... In machine learning, the data term corresponds to the training data, and the regularization is either the choice of the model or modifications to the algorithm.
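The distinction between training error and generalization error can be made concrete with a one-feature ridge fit through the origin, where the weight has the closed form w = Σxy / (Σx² + λ). The data and function names below are hypothetical. The sketch shows that increasing λ does not lower the training error. Whether the held-out error improves depends on the data, so no claim about it is made here.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form ridge weight for y ~ w * x (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def mean_squared_error(w, xs, ys):
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical training and held-out samples.
train_x = [1.0, 2.0, 3.0, 4.0]
train_y = [1.2, 1.9, 3.2, 3.8]
held_out_x = [5.0, 6.0]
held_out_y = [5.1, 5.9]

train_errors = []
for lam in (0.0, 1.0, 10.0):
    w = fit_ridge_1d(train_x, train_y, lam)
    train_errors.append(mean_squared_error(w, train_x, train_y))
    print(
        f"lam={lam:5.1f}  w={w:.4f}  "
        f"train MSE={train_errors[-1]:.4f}  "
        f"held-out MSE={mean_squared_error(w, held_out_x, held_out_y):.4f}"
    )
```

In practice, λ is chosen by comparing held-out (validation) error across several candidate values, not by looking at the training error.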

What is regularization? It is always intended to reduce the generalization error.

The concept of regularization is widely used even outside the machine learning domain. In general, regularization involves augmenting the input information to enforce generalization. Regularization methods add additional constraints to do two things: solve an ill-posed problem (a problem without a unique and stable solution) and prevent model overfitting.

In machine learning, regularization is one of the techniques used to control overfitting in high-flexibility models. In Lasso regression, the model is ... It tries to impose a higher penalty on the variables having higher values, and hence it controls the ...
